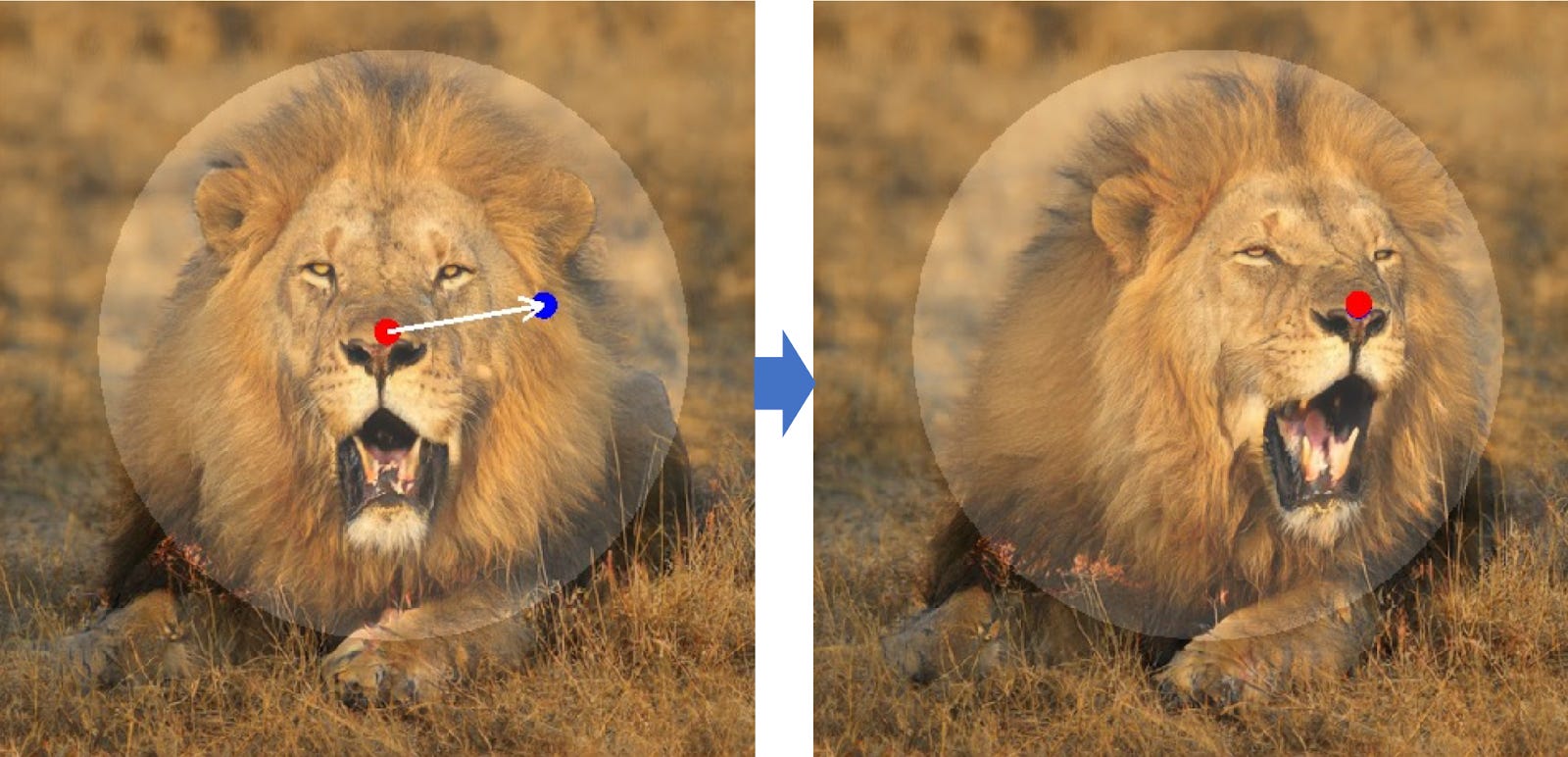

This work proposes DragGAN, a new approach for controlling generative adversarial networks (GANs) interactively: the user clicks handle points and target points on an image, and the model moves each handle point to its target. It has two main components. The first is feature-based motion supervision, which drives the handle points toward their target positions. The second is a new point-tracking approach that uses the discriminative features of the GAN generator to keep localizing the handle points as the image changes. Together, they allow precise control over the pose, shape, expression, and layout of diverse categories such as animals, cars, humans, and landscapes. Qualitative and quantitative comparisons both show that DragGAN outperforms prior approaches in image manipulation and point tracking. The method produces realistic outputs even in challenging scenarios, such as hallucinating occluded content and deforming shapes in a way that consistently follows the object's rigidity. The authors also demonstrate manipulation of real images through GAN inversion.
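The point-tracking step can be pictured as a nearest-neighbour search in feature space. The sketch below is a minimal NumPy illustration under stated assumptions, not the authors' code. It assumes the generator's intermediate feature map is already available as a `(C, H, W)` array. The names `track_point` and `step_direction` and the search radius `r2` are hypothetical, chosen here for illustration. After each motion-supervision step, the handle is moved to the pixel in a small square window whose feature is closest (L1 distance) to the handle's feature in the initial image. `step_direction` computes the unit vector from a handle to its target, which is the direction in which motion supervision nudges the handle's features by one small step.

```python
import numpy as np


def track_point(feat, f_ref, p, r2):
    """Relocate a handle point by nearest-neighbour search in feature space.

    Illustrative sketch (hypothetical helper, not the paper's implementation).
    feat:  (C, H, W) feature map of the image after the latest edit step
    f_ref: (C,) feature of the handle point in the initial image
    p:     (row, col) current handle position
    r2:    half-width of the square search window, in pixels
    """
    _, H, W = feat.shape
    y, x = p
    # Clip the (2*r2 + 1) x (2*r2 + 1) window to the feature-map bounds.
    y0, y1 = max(0, y - r2), min(H, y + r2 + 1)
    x0, x1 = max(0, x - r2), min(W, x + r2 + 1)
    patch = feat[:, y0:y1, x0:x1]
    # L1 distance between every feature in the window and the reference feature.
    dist = np.abs(patch - f_ref[:, None, None]).sum(axis=0)
    iy, ix = np.unravel_index(np.argmin(dist), dist.shape)
    # Convert window-local indices back to feature-map coordinates.
    return (y0 + int(iy), x0 + int(ix))


def step_direction(p, t):
    """Unit vector pointing from handle p toward target t (zero if already there)."""
    d = np.asarray(t, dtype=float) - np.asarray(p, dtype=float)
    n = np.linalg.norm(d)
    return d / n if n > 0 else d


# Usage with a synthetic feature map: plant the reference feature at (10, 12)
# and search from a nearby, slightly wrong handle position.
rng = np.random.default_rng(0)
feat = rng.normal(size=(8, 32, 32))
f_ref = feat[:, 10, 12].copy()
print(track_point(feat, f_ref, p=(8, 9), r2=4))  # (10, 12)
print(step_direction((0, 0), (3, 4)))  # [0.6 0.8]
```

Searching only a small window around the previous position keeps tracking cheap and avoids jumping to a visually similar but distant region. In a real editor, `feat` would be regenerated from the updated latent code at every iteration.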

Paper link: https://arxiv.org/abs/2305.10973